Tuesday, September 16, 2008

Users, Audiences, and Design

Doyle's Sherlock Holmes could deduce a great deal -- a person's profession, where he or she'd been recently, even his or her unspoken secrets -- from a handful of cues. It is an exaggeration, of course, and reflects assorted Victorian clichés and prejudices (do criminals have a particular ear shape?), but it remains plausible to readers because of a basis in truth: even as we use our tools to shape our surroundings, our tools shape us. For HCI, there are some implications -- given the data, we may spot, for example, a Mac user using a borrowed PC. And, if we are effective designers, we use the foresight granted by reason and experience to envision the different ways different users may use our designs.

DILLON & WATSON

Dillon and Watson report that "in current situations this often means distinguishing users broadly in terms of expertise with technology, task experience, educational background, linguistic ability, gender and age." There are other models, and they cite Nielsen's and Booth's examples before revealing their purpose: to consider the "psychology of individual differences" as it applies to design and user training.

We get an abbreviated history of psychometrics and definitions of various approaches. In theory, I'm very interested, but these are the sorts of tests and measurements I tend to question.

Differential psychology sets out to look for and quantify difference in ways that are seldom positive for everyone in the sample, and generally presumes that something like intelligence is constant -- that a measurement taken today (using a questionable instrument) will have some value tomorrow. Consider the list on page 6, and imagine we are measuring your broad cognitive speed today (surprise! It'll be fun, except for the part later where we divide top scorers from the others and give them all the ice cream). Are you ready? Going to enjoy getting this number? Think it might be better if you'd slept more? That's not part of the theory ... here, wear this pointy 'I am special' hat. Incidentally, these authors spend a lot of time saying things like "this ... has been highly praised by many reviewers" -- I want to know their names, and disagree that this is not a contentious issue. The number of factors and the way these are organized are not the primary distinction between theories except in a very narrow field of inquiry.

Similar criticisms of the other sections are possible. Cognitive psychology, which "originally tends to be more interested in general models of processes than in individual differences," is a little less noxious on the surface. It is here, however, greatly simplified and doesn't take into account any of the new data from new tools that allow the measurement and visual representation of neurological activity during cognitive tasks. These authors are focused on identifying some key terms and correlating them to terms in HCI, i.e. latency and speed. Personality and cognitive style are interesting concepts, but as easy to misuse as they are to use -- imagine if your computer operating system tried to 'help' you based on what it perceived as your neuroticism or extraversion indexes. The result could easily be something like Clippy the paperclip in Office ("it looks like you're writing a letter ..."). The psychology might attract funding from investors, but success in the marketplace would depend on user perception, and many users, despite their need for help, are sensitive to anything like condescension.

Psychomotor differences are relevant, but Thorndike's research (which suggested practice does not make perfect at all) isn't particularly applicable. Yes, some people are better at some things ... so?

As you may guess, I disagree with some of the six "practical implications of 100 years of differential psychology." I wrote out and deleted a line by line rant here. These hinge on the assumption in #1 that "a core number of basic abilities have been reliably and validly identified," something I don't believe the evidence presented here supports. I want to see that happen -- I want to see the promise of improvements for all users fulfilled -- but there are some major concerns. A "more rigorous theoretical and data-driven approach" could be good, or it could simply validate various preconceptions about the end user and his or her capacities, counterproductive if the goal is to actually improve user experience for all.

The presumption that low-visual-ability users will need help to perform as well as high-visual-ability users could result in a situation in which the interface is slower and more obnoxious for the very people we are trying to serve better.

"25% of variance on the basis of ability alone" is a made up number with no practical utility whatsoever ... the authors hedge their bets here, saying it shouldn't be the "end of the story." That is correct, gentlemen. It is not.

Though I've become antagonistic toward the article, I do agree that analysis of individual difference is useful, that more study is warranted and necessary, and that we can gain a better understanding of the people we design for. I even think psychology could be useful to that end, provided we very carefully consider our established assumptions.

LIGHT & WAKEMAN

All of us recognize the value of the positive individual experience with a website. The trick is to arrive at implementable suggestions likely to improve user experience across the board. The master trick involves documenting the reasons for your suggestions in a manner that demonstrates their validity in context, with real users.

Light and Wakeman provide their methodology and appear more sensitive to user concerns, and their recommendations seem far more logical and supportable. Where Dillon and Watson are sort of saying 'psychology is useful, here are some theories', Light and Wakeman are actually getting somewhere.

I may like their recommendations more because each appears to show the user the deference and respect he or she is due. Respect is a very useful term in this case. I'd suggest that it is possible to attempt to show respect for a wide variety of people without necessarily measuring them against an abstract standard (with or without agreed upon metrics).

MENTAL MODELS (PAYNE) AND CONCLUSIONS

Let's think about Sherlock Holmes a little more. His deductions are more accurate than is likely in part because of the structure and content of his society, a simplified representation of Victorian England, and in part because he is fiction and the author who created him will bend the entirety of the representations to grant his creation the solution to the problem at hand. In reality, even granted extraordinary powers of reason, technical skill, and an overwhelming desire to find a solution (things we don't all always have), we have difficulty perceiving things other than what we expect to perceive. We have certain things in common with all humans, yes, but we are also the product of the extensive series of events that led us to this point, events that may not necessarily have prepared us to understand end users who are very different from ourselves.

As Payne suggests, the position that "mental models are essentially analog, homomorphic representations" is a strong one. In other words, we create mental models, maps between cause and effect, that are similar in form to the actual causalities but not necessarily identical to them. Maps are useful up to a point, but they may leave out a great deal of information that could be useful depending on the type of problem we are trying to solve.

We have, in effect, a mental model of the user that takes into account what we expect from him or her, reliable only to the extent of our existing knowledge, replete with the assumptions necessary for our forward progress towards the design objective, but not necessarily accurate as to every detail. The map is useful, but it is not the same as the territory.

Likewise, the user forms a model of our design, expecting certain results based on certain actions, without necessarily cultivating an understanding of the underlying structure and origin of said results. Even if there is a great Author watching over us, it's unlikely that She will bend the fabric of all that is to make others as we expect them to be, as Doyle did for Holmes.

Therefore, whatever the underlying theory, it is better to set aside preconceptions at a certain stage of design to actually observe users in realistic contexts, as Light & Wakeman report they do. Of course, we can take a variety of shortcuts based on the observations and experiences of others who've come before -- 'stand on the shoulders of giants' (provided you trust them).

When there are design choices that cannot be evaluated with test groups to the extent that we might like, or a lack of feedback for some other reason, we can choose to err on the side of respect for the user when that is possible. We will, of course, sometimes show respect in ways that would be appropriate for us individually but aren't necessarily going to work for the audience -- all we can do is try, and do the best we can based on the information we have, within the parameters available (time, space, budgets).

The multiple presentation principle, for example, gives the designer a little more work, but demonstrates respect for the possibility that different people may learn or process differently. We do the work, and minimize the burden on the user. Of course, NOT giving someone work is, in some contexts, not respectful at all, and we get to this in the theory of not doing: "minimizing the mental effort of learners is not necessarily or always a good instructional strategy."

It means that, in design, as in other forms of writing, an understanding of the audience and of our purpose(s) is highly valuable. As we broaden the purposes of our designs, we need a broader understanding of our audiences -- not to categorize them more broadly, but rather to consider their ranges of needs and expectations.

Traditional design overrelies on the normative effects of standardized stimuli, creating problems for users who perceive or think about those stimuli in ways that diverge from the mean. We have the option of making that same mistake over and over, or of trying something that might make more work for us and, there is a chance, might not work quite as we expect. We weigh the costs and benefits of designing with the success of all end users in mind.

What is the Legacy of the Memex?

In 1945, Vannevar Bush, the director of the Office of Scientific Research and Development, wrote an article for The Atlantic Monthly called "As We May Think." During the war, Bush had been in charge of coordinating the research of six thousand American scientists as they attempted to apply their expertise to the battlefield. However, with the war at a close, Bush clearly crafted the paper for a civilian audience. Bush's main point is that we have accumulated a giant body of research, but we have not figured out how to organize knowledge so that it is immediately accessible. With the war over, Bush asks, "What are the scientists to do next?" (1). "As We May Think" is Bush's answer to this question. His foresight was remarkable. He essentially sketched out what we now call the personal computer, the internet, Google and Web 2.0, all wrapped up in one imaginative concept he called the "Memex." Bush and his Memex influenced many of the luminaries in the fields of computer science and human-computer interaction (HCI). How, then, can we characterize the impact of the Memex?

What is a Memex?

Influence of The Memex

Stanford Research Institute, Menlo Park, California

May 24, 1962

Dr. Vannevar Bush

Professor Emeritus

Massachusetts Institute of Technology

Cambridge, Massachusetts

Dear Dr. Bush:

I wish permission from you to extract lengthy and definitely acknowledged quotes from your article, "As We May Think," that appeared in The Atlantic Monthly, July, 1945. These quotes would appear in a report that I am writing for the Air Force Office of Scientific Research, and I am sending a parallel request to The Atlantic Monthly.

For your information I am enclosing a relatively brief and quite general writeup describing the program that I am trying to develop here at Stanford Research Institute. The report which I am writing (and for which I am requesting quotation permission from you) is a detailed description of the conceptual structure that I have developed over the years to orient my pursuit of this objective of increasing the individual human's intellectual effectiveness. It will also contain a number of examples of the way in which new equipment can lead to new methods and improved effectiveness, to illustrate my more general (but non-numerical) framework, and your article is quite the best that I have found in print to offer examples of this.

I might add that this article of yours has probably influenced me quite basically. I remember finding it and avidly reading it in a Red Cross library on the edge of the jungle on Leyte, one of the Phillipine [sic] Islands, in the Fall of 1945.

Engelbart goes on to say:

I re-discovered your article about three years ago, and was rather startled to realize how much I had aligned my sights along the vector you had described. I wouldn't be surprised at all if the reading of this article sixteen and a half years ago hadn't had a real influence upon the course of my thoughts and actions (Nyce and Kahn 235).

In this paper, an effort in counter-discipleship, I hope to remind readers of what Bush did and did not say, and point out what is not yet recognized: that much of what he predicted is possible now; the Memex is here; the 'trails' he spoke of--suitably generalized, and now called hypertexts--may, and should, become the principal publishing form of the future (Nyce and Kahn 245).

Our team at Microsoft Research has begun a quest to digitally chronicle every aspect of a person's life, starting with one of our own lives (Bell's). For the past six years, we have attempted to record all of Bell's communications with other people and machines, as well as the images he sees, the sounds he hears and the Web sites he visits--storing everything in a personal digital archive that is both searchable and secure. (MyLifeBits)

Digital memories can do more than simply assist the recollection of past events, conversations and projects. Portable sensors can take readings of things that are not even perceived by humans, such as oxygen levels in the blood or the amount of carbon dioxide in the air. Computers can then scan these data to identify patterns: for instance, they might determine which environmental conditions worsen a child's asthma...After six years, Bell has amassed a digital archive of more than 300,000 records, taking up about 150 gigabytes of memory (1).

Is this the "fulfillment" of Bush's vision, as the creators suggest, or is this a misguided corruption? What are the possible emotional pitfalls of human-life-as-database? If we are not worried about the impact on ourselves then we should ask what our loved ones will do with all this digital stuff once we are gone. In short, if the dream of the Memex is embodied in MyLifeBits, shouldn't someone ask whether or not this is a good thing? The creators of the project begin their article by stating "Human memory can be maddeningly elusive" (1). They go on to argue that, "digital memories allow one to vividly relive an event with sounds and images, enhancing personal reflection in much the same way that the Internet has aided scientific investigations. Every word one has ever read, whether in an e-mail, an electronic document or on a Web site, can be found again with just a few keystrokes" (1).

The assumption behind this statement is that humans want to remember everything, that somehow letting memories slip away is a problem. Don't we need to let certain memories fade? Isn't the fading of memory part of the healing and learning process? If I could call up every mistake I have ever made in text or video or audio, I am fairly certain I would go mad. Bush proposed the Memex with the goal of organizing knowledge. MyLifeBits challenges our idea of knowledge, or at least, self-knowledge. I do realize that I possess a fairly large archive of email, dating back probably ten years now. And I know that I rarely consult this archive. However, what if that archive were more fully integrated into my life? For example, what happens when our personal history records are merged with everyday knowledge searches? Google has already stated that they hope to integrate historical newspapers with normal searches, so that your results turn up, essentially, everything (Soni). Desktop searching has improved dramatically as well, with technologies like Apple's Spotlight constantly indexing every file on your computer. Will we be able to move forward emotionally when our multimedia past is so accessible? How will grieving family members deal with fifty years of email, text messages and phone conversations? The dream of the Memex, as embodied in MyLifeBits and supported by a confluence of other technologies (Google, cheap storage, light and powerful digital camcorders, wide access to broadband and so forth), is not just changing our relationship to our present; it has the potential to dramatically change our relationship with our past. Was this what Vannevar Bush hoped for, or have we misinterpreted his dream? Perhaps it is not a question worth asking. Like tree roots growing around a large rock, the technology will always find a way to build itself, despite any objections or obstacles. However, we must take responsibility for our inventions.
Computers have evolved from distant, impersonal, room-sized mystery boxes to the personal computer, and now we are on the brink of intimate computing.

Conclusion

Works Cited

Bush, Vannevar. "As We May Think." The Atlantic Monthly, July 1945.

Monday, September 15, 2008

Interactivity, The Video Game, and Chris Crawford

Historical Perspective Essay ~Matt Rolph

You float unsupported on the edge of darkness. Across the void, your mission objective: the flickering lights of the enemy. As you glide into your attack, their fire leaps out towards you, missing, missing, missing, and then – just as you near the goal – a direct hit. In that final fraction of a second, as an explosion renders around you, you wonder whether this is all there is or whether there can be something more. And then it’s over.

Of course, the space invader, the asteroid, and all the other creatures of their kind, are not made to wonder. They do not reflect on the nature of their existences. Their makers, on the other hand, have the luxury of philosophy. This has been the case since the beginning of computer-driven games, an important part of the history of human-computer interaction, but there have been precious few game makers who have questioned the repetitive aspects of game design, and none who have dared to do so more vocally than Chris Crawford, “dean of American game design.” To consider his complaints, it’s useful to look briefly at the history of computerized games, review Crawford’s own career, and weigh some related concepts in HCI.

First, a definition of the computerized game: In the standard ‘video game’, the computer-managed, program-driven game field (sometimes all of it, sometimes a portion of it at any given time, or, in a text-based example, a description of it, though we will deal only minimally with text-based games) is represented on a graphical display. The player interacts with the game by means of an input device such as a paddle, joystick, mouse, keyboard, or another specialized device.

In 1958, physicist William “Willy” Higinbotham created a tennis-like game, “Tennis for Two”, displayed on an oscilloscope. He was head of the instrumentation division at Brookhaven National Laboratory in New York, and he meant his creation, which he initially put together from spare parts including an analog computer (not the digital computer his team was building for more serious work), to entertain visitors to the facility. It was the first video game, and somewhat resembled the later and more famous Pong. (Anderson, Hunter, “William Higinbotham”)

Willy … [used old parts and an analog computer but] did make use of some recently invented transistors as flip-flop switches--a harbinger of things to come … The screen display was a side view of a tennis court … Each player held a prototypical paddle, a small box with a knob and button on it. The knob controlled the angle of the player's return, and the button chose the moment of the hit … Gravity, windspeed, and bounce were all portrayed … The game was simple, but fun to play, and its charm was infectious. [Higinbotham’s friend and colleague Dave] Potter remembers the popularity of the game: ‘The high schoolers liked it best. You couldn't pull them away from it.’ (Anderson)

The next known game was created by MIT student Steve Russell in 1961, who dubbed it “Spacewar”, and set it up on a Digital PDP-1 (Programmed Data Processor-1) minicomputer. It somewhat resembled the game we’ve come to know as “Asteroids” (Herman).

From the outset, interaction has meant a simulation of control over the motion of a game element around or into other elements. The computer-processed algorithms handled the relatively simple mathematics of collision or the lack thereof, tracking the coordinates of all elements on the field and triggering events when any given element overlapped or became directly adjacent to another. In other respects, games are different from other computer-aided tasks, as universal usability and the accessibility of task completion are not primary considerations (Lazzaro 680). Games typically offer levels or stages of increasing difficulty intended to result in the pre-conclusion termination of the experience for all but the most dedicated and adept players. This is particularly interesting when viewed through the lens of Lev Vygotsky’s social cognition theory, which suggests a ‘zone of proximal development’, a challenge slightly beyond the established capacities of the learner which, by virtue of that property, creates ideal conditions for independent problem solving, for engagement, and for learning, precisely as these games do. Vygotsky, it’s important to note, defined the ZPD as created by humans: “determined through problem solving under [human] adult guidance or in collaboration with more capable peers” (qtd. in Berger). The implication is that in presenting a series of ever-increasing challenges the video game serves in place of an adult teacher or more capable peer, another reason for adult concern about them, though most complaints focused on the amount of time the games consume and their simulations of violence. In terms of the math, it makes no difference whether the simulated collision involves a representation of a bullet or of a snowflake. The narrative metaphors associated with the games reflected standard themes, and shooting was a very popular one.
Even for a pacifist, it's hard to miss the appeal of the hyperbole of mythology, the polarization of us/them (or me/them) antagonism, and the illusory immediacy of threat elements, all with very simple rules of engagement: fire at will. “Playing games in their discretionary time, gamers mainly play for the emotions the games create … player experience design crafts … cognitive and affective responses in conjunction with user behavior” (Lazzaro 681).
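
The collision bookkeeping described above (track every element's coordinates, trigger an event when two overlap or touch) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Element` class, the rectangular bounding boxes, and the function names are hypothetical and are not drawn from any of the historical games discussed here.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Element:
    """A hypothetical game element with a rectangular bounding box."""
    name: str   # narrative label only; the math never inspects it
    x: float    # left edge
    y: float    # bottom edge
    w: float    # width
    h: float    # height


def touches(a: Element, b: Element) -> bool:
    """True when two boxes overlap or are directly adjacent (share an edge)."""
    return (a.x <= b.x + b.w and b.x <= a.x + a.w and
            a.y <= b.y + b.h and b.y <= a.y + a.h)


def collisions(elements):
    """Check every pair on the field and report the pairs that touch."""
    return [(a.name, b.name)
            for a, b in combinations(elements, 2)
            if touches(a, b)]


field = [
    Element("ship", 0, 0, 2, 2),
    Element("bullet", 1, 1, 1, 1),
    Element("rock", 10, 10, 3, 3),
]
print(collisions(field))
```

Renaming "bullet" to "snowflake" changes nothing but the label in the reported pair, which is the point made above: the arithmetic is indifferent to the narrative metaphor laid over it.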

Crawford’s complaints about video game design are more substantive than anti-violence rhetoric. He is, in fact, an avid wargamer and began his programming hobby thinking about how to computerize games like the paper wargame, Blitzkrieg, which he and a friend played in 1966: “… I became an avid wargamer, and from there started thinking of my own designs. When computers became available, I built one and programmed it with a wargame.” (Krupa) Rather, he laments the limited nature of game interactivity and bemoans the repetitive nature of game design.

These days, Chris Crawford is less known for the games he has authored than for his presence in the industry as the pointed voice of reason. He is a reminder, to those who will listen, that maybe, just maybe, there is room for thoughtful and experimental game design in a world where million dollar budgets and months of technological hype, more often than not, result in peculiarly non-interactive titles … (Dadgum)

“In 1979, I got a job at Atari” (Krupa). Crawford was fresh from a job as a community college physics teacher when he started to write games. After initial successes at Atari, he was “promoted to supervise a group that trained programmers about the Atari computers” (Crawford, MobyGames). After Atari collapsed in 1984, Crawford continued his work as a freelance designer and developer, writing for the new Macintosh computer. He also authored 1982’s The Art of Computer Game Design and five other books on the subject (Krotoski) and founded the Journal of Computer Game Design and the Game Developers Conference (Dadgum). Despite the longevity of his career and the commercial success of some of his titles (Atari 800 titles "SCRAM" and "Energy Czar"; Avalon Hill's "Legionnaire"; "Eastern Front 1941"; for the Macintosh, "Balance of Power," "Patton vs. Rommel," and "Patton Strikes Back"), Crawford is better known for controversial statements than for his designs.

In 2006, Chris Crawford was quoted as saying ‘the video game is dead.’ Asked for clarification, he offered “What I meant by that was that the creative life has gone out of the industry. And an industry that has no creative spark to it is just marking time to die” (Murdey).

Before he got to that point, he lamented what he perceived as a fundamental misunderstanding of the concept of interactivity and evinced distaste for the central metaphors of the gaming community:

Unfortunately, the term "interactive" has been so overused that it has lost any meaning other than "get rich quick". We see the term applied to television, theater, cinema, drama, fiction, and multimedia, but the uses proposed belie a misunderstanding of the term. Yet, interactivity is the very essence of this "interactive" revolution. It therefore behooves me to launch the new Interactive Entertainment Design with a straightforward explanation of interactivity. … Part of the problem most people have in understanding interactivity lies in the paucity of examples. Interactivity is not like the movies (although some people who don't understand interactivity would like to think so). Interactivity is not like books. It's not like any product or defined medium that we've ever seen before. That's why it's revolutionary.

Take heart. There is one common experience we all share that is truly, fundamentally, interactive: a conversation. If you take some time to consider carefully the nature of conversations, I think that you'll come to a clearer understanding of interactivity. … This process goes back and forth until the participants terminate it. Thus, a conversation is an iterative process in which each participant in turn listens, thinks, and expresses. (Crawford, Fundamentals)

… I wouldn't be carping about the cinematic metaphor if I didn't have a better one to offer. I propose that we use the conversation as our metaphor for game design. I can imagine the howls of outrage at so prosaic a suggestion. How can I suggest replacing a glamorous, ego-stroking metaphor with a dull, drab one? We all know that people pay good money to see a movie; who would ever pay money to have a conversation? What possible entertainment value can a conversation offer? The answer, of course, is that a conversation has no entertainment value, but the reason why conversations have no entertainment value reveals a great deal. You see, a conversation is necessarily a two-person event. The world's greatest conversationalist could not carry on more than one conversation at a time. Thus, a person gifted with great powers of conversation could never parlay that gift into a marketable entertainment experience, because such conversations could not be shared by more than one person at a time. A singer can sing to thousands at once; an actor can play for millions via television or cinema; a storyteller can share her beautiful stories with countless numbers through print. But the hard reality is that a conversationalist entertainer must always have an audience of one, and it's pretty hard to make money with such a tiny audience. So conversation never developed as an entertainment form. But now we have a technology that eliminates the restriction on the audience. Think of a computer game as a "conversation in a can". Through the medium of the computer, the author interacts with the audience, and that interaction is a direct, one-on-one experience. Yet, we can duplicate the floppies on which the potential conversation is stored, thereby allowing the great conversationalist to reach many people. Suddenly, conversation has become an economically viable form of entertainment!

The true value of this metaphor is that it focuses our attention on the interactive nature of our work. A conversation is one of the few interactive experiences that we already know about. We all have a thorough understanding of the requirements of a good conversation. We can apply that understanding to our computer games to improve them. (Crawford, Conversational Metaphor)

Describing interactivity as conversation sets the bar high: it requires either incredible advances in artificial intelligence or a masterwork of design that effectively predicts the bulk of the possible player responses. An effective example, with or without commercial success, has yet to be realized.
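
To make the listen-think-express cycle concrete, here is a deliberately tiny Python sketch of one side of such a "conversation". Everything in it is an assumption made for illustration: the function names, the canned replies, and the stopping words are hypothetical and are not taken from Crawford's writing or software.

```python
def listen(raw: str) -> str:
    """Accept the player's input (here, just normalize a line of text)."""
    return raw.strip().lower()


def think(heard: str, memory: list):
    """Choose a reply; a real game would need far richer reasoning here."""
    memory.append(heard)
    if heard in ("bye", "quit"):
        return None  # a participant terminates the conversation
    if memory.count(heard) > 1:
        return f"You already said '{heard}'."
    return f"Tell me more about '{heard}'."


def express(reply: str) -> str:
    """Present the reply back to the player."""
    return f"GAME: {reply}"


def converse(player_lines):
    """Run the cycle once per player turn until someone ends it."""
    memory, transcript = [], []
    for raw in player_lines:
        reply = think(listen(raw), memory)
        if reply is None:
            break
        transcript.append(express(reply))
    return transcript


for line in converse(["Dragons", "dragons ", "bye", "ignored"]):
    print(line)
```

The loop itself is trivial; all of the difficulty sits inside `think`, which here knows only whether it has heard a phrase before. That gap between the toy and a real conversational partner is the distance the metaphor asks designers to cover.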

Even of landmark games like The Sims, Crawford offers little praise:

Will Wright spoke with me just as he began working on The Sims, and I urged him to put interpersonal relationships in. He chose not to. … The Sims is a swig of water to a dying man in the desert. It really doesn't offer that much that's interpersonal, but the games industry has been so utterly devoid of it that even the slight whiffs of it you get in The Sims set people on fire. The Sims isn't about people, it's a housekeeping sim. It's consumerism plus housekeeping. It works, it's certainly better than shooting, and that's its success. But interpersonal interaction is not about going to the bathroom. It's much much more. The Sims is ultimately a cold game. The interactions people have, have a really mechanistic feel. (Krotoski)

Crawford also ascribes the lack of success with the female demographic to the lack of social reasoning in games:

[What is the essence of interactivity?] It's the choices, giving people lots of interesting choices. Actually, that's Sid Meier's definition of a good game too. It's just that you have to give choices that are intrinsically interesting, and that's why we have so intrinsically failed to address the female market. They are not interested in choices involving spatial reasoning and guns. Social reasoning is one of the primary entertainment impulses for women. You know, figuring out who likes whom, allies and enemies and that sort of thing. That's a major part of a woman's psychological or emotional life. That's what we should be doing. However it's a lot more complicated. It's easier to measure the trajectory of a bullet, but chasing people's feelings is a much more difficult job. (Krotoski)

Around the time Crawford was not quite exactly saying the video game is dead, there were a variety of innovations in the industry, including the advent of the Wii, hailed as a revolution in interactivity. To Crawford, though, a new controller didn’t represent a major change:

GS: Continuing with the Nintendo theme, do you feel that the Wii is a step in the right direction as far as innovation? Or do you think it's going to be the same old stuff only with a fancy new controller?

CC: More likely, the latter. I'm not a fortune teller. I don't know what they'll do. But I think that it is reasonable to expect that an industry that hasn't produced any innovation in at least a decade is unlikely to change its spots. (Murdey)

Crawford’s own interactive storytelling project, the “Erasmatron”, a decade or more in development and to be marketed as Storytron (http://www.storytron.com/), is in its infancy with an uncertain future ahead. Crawford chose the original project name and his domain name, erasmatazz.com, as a tribute to Desiderius Erasmus of Rotterdam, a precursor to Martin Luther who pointed out the flaws of the Roman Catholic Church with intellectual rigor and acumen but did so while remaining a Catholic. If the game design establishment is like a great church, then perhaps Crawford is very like an Erasmus within it: calling for change and encouraging scrutiny of prevailing wisdom, but nevertheless remaining within rather than ‘lighting out for the territories’ (as Huck Finn might) and rejecting video games and their design entirely.

Whether Crawford is prophetic, foretelling the course games are to take (or at least one course they might take once the technology catches up with his vision), or simply a voice crying in the wilderness, an alternative theorist lamenting his passage from insider to outsider, he raises some interesting points regarding the nature of innovation in HCI.

GS: So you'd call yourself a pessimist on this front.

CC: Yeah, I think that’s valid. Hey, maybe they'll surprise us. But I've been preaching this sermon for 20 years now…more than that, in fact, and I haven't seen any serious attempt to move in that direction. Indeed, I hear all sorts of arguments as to why “we don't need to change our spots, we’re doing just fine the way we are.” And in fact, and this is a fundamental point, nobody changes unless they're in pain. And the industry is not in pain. So it's going to keep doing the same thing until it hurts. (Murdey)

There is a point at which the preservation of the status quo, the defense of the investment to date and the initial direction, outweighs anything promised by innovation. In theory, a variety of forces act on the developers of a technology to motivate them to innovate and improve upon existing technology; in practice, the industry moves in directions suggested by existing successes, and investment in unproven alternatives is less likely. There is a risk that, to preserve the metaphors and prevailing thinking that have brought us this far, American HCI will follow the example of the American automobile industry and continue to sell sport-utility-class vehicles when an innovation (improved fuel efficiency, for example) could create new demand and address problems which, though currently of modest concern, will eventually become critical. When there is pain (a spike in gasoline prices, to continue the metaphor), public demand for alternative solutions grows. But deferring the development of alternatives until the cries become audible in the marketplace means that the end users of a technology will be disappointed, because an alternative will not be ready to deploy until years after the crisis is first perceived.

The game design industry can continue to improve upon the graphics, but so long as it relies on the same mechanisms of interactivity, whatever the input device, and clings to the same modalities and prevailing metaphors, it risks building essentially the same things over and over again, disappointing the existing gaming community and attracting fewer new users than it otherwise might. Attempting to standardize the appetites of the marketplace may work for a time, but can it work forever? Will there be something more?

AIGameDev.com. “What if game AI had been solved …” Forum discussion thread. 11 Sep 2008. 14 Sep 2008. http://forums.aigamedev.com/showthread.php?p=4798

Anderson, John. “Who Really Invented The Video Game?” Creative Computing Video & Arcade Games. I: 1, Spring 1983, p. 8. 14 Sep 2008. http://www.atarimagazines.com/cva/v1n1/inventedgames.php

Berger, Arthur Asa. Video Games: A Popular Culture Phenomena. Transaction Books, 1993. p. vii. 14 Sep 2008. http://books.google.com/books

Berger, A.E. “Vygotsky’s Zone of Proximal Development.” Encyclopedia of Educational Technology. Bob Hoffman, ed. SDSU Department of Educational Technology, 1994-2008. 13 Sep 2008. http://coe.sdsu.edu/eet/articles/vygotsky_zpd/index.htm

Crawford, Chris. Art of Computer Game Design, The. 1982. Washington State University. 14 Sep 2008. http://www.vancouver.wsu.edu/fac/peabody/game-book/Coverpage.html

Crawford, Chris. “Conversation: A Better Metaphor for Game Design.” Journal of Computer Game Design. VI. 1993. 14 Sep 2008. http://www.erasmatazz.com/library/JCGD_Volume_6/Conversational_Metaphor.html

Crawford, Chris. “Developer Bio.” MobyGames. 18 Aug 2007. 13 Sep 2008. http://www.mobygames.com/developer/sheet/view/developerId,744/

Crawford, Chris. “Erasmatazz: Interactive Storytelling.” 2008. 14 Sep 2008. http://www.erasmatazz.com/

Crawford, Chris. “Fundamentals of Interactivity.” Journal of Computer Game Design. VII. 1994. 14 Sep 2008. http://www.erasmatazz.com/library/JCGD_Volume_7/Fundamentals.html

Crawford, Chris. On Game Design. New Riders, 2003. 14 Sep 2008. http://books.google.com/books

Dadgum Games. “Halcyon Days: Interviews with Classic Game Programmers: Chris Crawford.” Undated. 14 Sep 2008. http://www.dadgum.com/halcyon/BOOK/CRAWFORD.HTM

Herman, Leonard, Jer Horwitz, Steve Kent, and Skyler Miller. “The History of Video Games.” Gamespot. CNET Entertainment Networks. 2008. 14 Sep 2008.

Hunter, William. “Player 1 Stage 1: Classic Video Game History.” The Dot Eaters. 2000. 14 Sep 2008. http://www.emuunlim.com/doteaters/play1sta1.htm

Krotoski, Alex. “Chris Crawford: The Interview.” The Guardian Unlimited, GamesBlog. Manchester, UK. 2 Feb 2005. 14 Sep 2008. http://www.guardian.co.uk/technology/gamesblog/2005/feb/02/chriscrawford

Krupa, Frederique. “Chris Crawford: Dean of American Game Design.” The Swap Meet: Insider’s Look: Articles & Interviews About 3D Interactive Media. Undated. 13 Sep 2008. http://www.theswapmeet.com/articles/crawford.html

Lazzaro, Nicole. “Why We Play: Affect and the Fun of Games.” The Human Computer Interaction Handbook, 2nd ed. Andrew Sears and Julie Jacko, eds. LEA: New York, 2008. pp. 679-700.

Murdey, Chase. “Video Games are Dead: A Chat with Storytronics Guru Chris Crawford.” Gamasutra. 12 Jun 2006. 14 Sep 2008. http://www.gamasutra.com/features/20060612/murdey_01.shtml

“William Higinbotham.” Wikipedia. Wikimedia Foundation. 9 Aug 2008. 14 Sep 2008. http://en.wikipedia.org/wiki/William_Higinbotham

Sunday, September 14, 2008

History of GUI

- Stuart Card, interface researcher at Xerox PARC

When did the graphical user interface (GUI) become so ingrained within our daily lives and society, and why? Surely to some, it was simply the shape of things to come, during a time when users would come to demand more from computers as they became much more personal devices. The eventual mainstream adoption of computers, together with the innovations of a select few who were ahead of their time, is responsible for the present-day realizations of their initial vision.

Why were the end users so critical to this development? Quite simply, as the computer industry continued to expand, so too did its base of users. Soon, professionals from many different backgrounds and walks of life would be using computers to fulfill their individual needs. With a broader audience, it was only natural that, in order to maximize both efficiency and the user experience, the usability of computers needed to expand beyond that of simple text-line interfaces. Human beings are visual learners, and as such, having a machine that placed more focus on graphical interaction and manipulation was one small step for users, one giant leap for usability.

Long before anything that resembled what we tend to think of today as a GUI, there were a few innovations that sparked interest in the field and showed everyone just how useful and leading-edge this technology could be. The first of these was the Semi-Automatic Ground Environment (SAGE), which NORAD used from the late 1950s into the 1980s to track Soviet bombers so that they might be intercepted. Developed by both MIT and IBM for the US Air Force, the program led to significant advances in interactive, online, and real-time computing. The system even used modems so that information could be delivered to other bases, as well as to the pilots who were ordered to intercept bombers. Users of the SAGE system were able to make adjustments to radar intercept data on their screens using a light pen. Like many technological advancements in our society, the SAGE project, a government-funded program, helped to pave the way for interactive, intuitive, and online computing machines.

A very critical element of early GUIs was computer-aided design (CAD), in which the user is assisted by a computer capable of performing complex geometric calculations to organize objects and data. Ivan Sutherland, the father of Sketchpad (1963), was a true pioneer. His software, which made excellent use of the recently invented light pen, used an x and y coordinate system and was capable of receiving input, storing it, and displaying it on a CRT monitor. This was not simply an interaction and interface marvel; it was also a computing marvel, as at this time most computer programs were still run as batch jobs and not in real time.

While video conferencing is nothing new to most users today, it stands to reason that at a particular point in time it was a brand new and revolutionary feature. The Augmentation of Human Intellect project, conducted by Doug Engelbart at the Stanford Research Institute, was an incredible innovation in computing and interaction, the likes of which the world had never seen. Inspired by the memex as described by Vannevar Bush in the mid-1940s, the Augmentation of Human Intellect project was focused on how young adults learned, and featured new technology such as a mouse-driven cursor and multiple display windows used to interact with hypertext. In his summary report, Engelbart explains that comprehension is essential for increased capability, hence the strong focus on technology that is easily understood. Furthermore, by 1968, the On-Line System, which was developed as part of Engelbart’s project, was capable of video conferencing, connecting users over distances.

Completed in 1973, the Xerox Alto (named for the Palo Alto Research Center where it was developed) is the grandfather of the modern GUI as we know it. The Alto was not originally designed to be a device for “standard desktop use”, but as software was developed for it, the doors of usability and opportunity began to open. The Alto boasted a 606 × 808 monochrome bit-mapped video display (taller than it was wide), a 3-button mouse, and a total address space of 64 K 16-bit words; using memory bank selection, a total of 256 K was possible.
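The memory figures above are easier to appreciate with the arithmetic written out. This is only a back-of-the-envelope sketch: the 16-bit address width and the bank count of four are my own assumptions, inferred from the 64 K and 256 K figures rather than taken from any Alto documentation.

```python
# Back-of-the-envelope arithmetic for the Alto memory figures quoted above.
# ASSUMPTIONS (not from the source): a 16-bit address selects one word,
# and "256 K" means four banks of 64 K words each.
ADDRESS_BITS = 16   # assumed address width
WORD_BITS = 16      # word size given in the text
BANKS = 4           # assumed: 256 K / 64 K

words_per_bank = 2 ** ADDRESS_BITS                 # 65,536 words = 64 K
bytes_per_bank = words_per_bank * WORD_BITS // 8   # 131,072 bytes = 128 KB
total_words = words_per_bank * BANKS               # 262,144 words = 256 K
total_bytes = total_words * WORD_BITS // 8         # 524,288 bytes = 512 KB

print(f"{words_per_bank // 1024} K words per bank, "
      f"{total_words // 1024} K words with {BANKS} banks")
```

Under those assumptions, a fully expanded machine would have held half a megabyte, which helps explain why a bit-mapped display was such an extravagance at the time.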

At the time of its introduction, these features were revolutionary, and as such, allowed Xerox to attempt revolutionary things. Its command line program, called Alto Executive, was capable of loading programs both locally and across networks with the help of Net Executive. For file manipulation, the Alto had a GUI file manager called Neptune Directory Editor, which allowed the user to use the various buttons on the mouse to manipulate files and directories without the need to type commands into a command line interface. The Alto also had a basic word processing program called Bravo, as well as a paint program called Draw. These programs also made use of the GUI functionality available, further eliminating the need to type arcane commands to accomplish one’s goals. The programming language Smalltalk was also developed at the Palo Alto center for the Alto computer. This high-level programming language allowed Xerox to create an environment that resembles the modern desktop, with draggable windows, pop-ups, and icons.

In 1981, Xerox commercially released the Xerox 8010 (Star), its only real attempt to enter the personal computing market. Based upon the designs of the Alto, the Star had much of the same functionality. More importantly, in the interest of usability, its designers did their best to adhere to four rules regarding their product: seeing and pointing, progressive disclosure, uniformity in all applications, and what you see is what you get. Although Xerox would never become a major competitor in the personal computing wars to follow, it certainly opened the doors for those who would choose to compete.

Perhaps the most important event in modern computing took place at the Palo Alto Research Center (PARC), but it was not the development of the Alto computer; it was a visit from Steve Jobs. Then working on the next evolution of computing, Jobs used Apple stock as a bargaining chip to negotiate with Xerox, bringing a small team of Apple developers with him to PARC to examine some of the research being done at the center. Two new technologies in particular that Jobs and his team were introduced to were the GUI elements of Smalltalk as implemented on the Alto, and Ethernet networking technology. It was said that Jobs was so taken with the Alto that he just about ignored everything else he saw at PARC. Jobs then negotiated to acquire an Alto unit to take back with him to Apple, where his team would use it as an inspiration to change computing.

Released in 1983, the Lisa was, at that time, the pinnacle of personal computing. However, its hefty price tag of nearly ten thousand dollars lessened its appeal to a larger audience. The Lisa included desktop management very similar to that of today’s Macintosh Finder and Windows Explorer, allowing multiple window instances and the ability to drag and drop items from one to the next. The Lisa also offered functionality such as a clock, a basic calculator, and an entire suite of office tools. LisaCalc, a spreadsheet program, was widely used by businesses during its time, as it allowed users to enter data and reuse cells by simply typing over them, unlike traditional spreadsheets, which required erasing and rewriting figures. There were also LisaDraw, LisaGraph, LisaList (a database creation program), LisaProject, and LisaWrite. These programs all made use of the latest GUI technology, allowing the user to click to add features and implement functionality at various points. The Lisa also had customizable system preferences so that the user could control the startup volume (disk) and adjust speaker, keyboard, mouse, and screen settings; once again, all in an easy-to-navigate GUI.

Developed concurrently with the Lisa was Apple’s Macintosh. Released in 1984 with a fantastic marketing campaign, the Macintosh crushed the Lisa in sales. Priced much more reasonably at less than three thousand dollars, the Macintosh initially experienced great sales; however, this was short-lived, and sales quickly began to diminish. One of the major differences between the Mac and the Lisa was the overall look and feel of the OS. While the Lisa had fantastic and revolutionary functionality, it felt much less personal than the Mac. The Mac placed a strong emphasis on visual representation, such as displaying an icon on the desktop when a disk was inserted, which users could then click and interact with. Another very noticeable difference was the control panel, which was used to modify user preferences. Unlike the heavily text-based preference customization on the Lisa, the Mac control panel was very pictorial in nature. While this may have been intended to produce an easier-to-understand interface, a completely pictorial representation could also leave users confused about some of the functionality, wondering what certain icons represent.

Significant changes in usability and functionality came with the introduction of MacOS 1.1, however, which further improved the experience it provided users. Some of the new functionality included the ability to drag the cursor to select multiple objects, which allowed the user to perform an operation on more than one item at a time, adding to efficiency and the user experience. The Finder utility also included a “close all” function, which allowed users to close all of the currently open windows and return to their desktop.

In contrast to Apple, which developed its systems as one piece, hardware and software together, Microsoft took a much different approach. Its vision was to deploy its software onto as many machines as possible, which was made possible thanks to its relationship with IBM. By the time the Macintosh was released, Microsoft already had a business relationship with IBM, having provided it with MS-DOS. Windows 1.0 was Microsoft’s initial attempt at a GUI OS; however, it lacked a lot of the features and functionality found in the Macintosh, such as the ability to have overlapping windows. This crippled the user's ability to multi-task, as only so much could be done at once. In addition, the OS itself was really just MS-DOS running GUI programs behind the scenes, which further hindered its potential. Version 2.0 introduced overlapping windows, added support for applications such as Word and Excel, and was bundled with AT&T computers.

For Microsoft, the major turning point was the release of Windows 3.0, which would go on to become the first widely adopted version of Windows. It boasted several UI improvements, such as being able to drag and drop objects between folders in different windows, as well as improvements to elements such as the control panel, which included larger buttons and a much more organized look and feel. It also made use of hypertext in its help files, which allowed users to navigate information much more easily than by scanning a document with a large amount of text. Improvements were also made to the paint program, which gave users a much more robust experience. Later, in Windows 3.1, Microsoft also added the ability to remove files relating to specific programs or folders, similar to Add/Remove Programs in modern versions of Windows, sparing the user from having to delete the desired files manually. In Windows 3.11, the Workgroups edition added Microsoft Mail, an early email application that looks very similar to Outlook today, as well as Scheduler, a scheduling application very similar in look and feel to Outlook Calendar.

The grueling fight between Microsoft and Apple raged on in the mid-1980s and into the 1990s. At several points, Apple attempted to sue Microsoft on the basis that the look and feel of Apple’s OS, which was copyrighted, had been stolen by Microsoft. However, to be fair, much of Apple’s inspiration was, to a certain extent, stolen from the Alto. The competition between these two companies has changed the world we live in today, and even though Apple was once thought to be broken and beaten, it has shown that it will continue to be around for a long time, as its products continue to grow in market share. Amongst users, it is commonly accepted that Apple’s MacOS is a much more enjoyable experience for the user, while Windows’ dominance is the result of compatibility and, at one point, of activities that became the subject of an antitrust lawsuit. Regardless of what anyone thinks of either company, they have both done their part to bring us into the computing world we now live in, and surely they could not have done it without those on whose shoulders they stood.

Image source:

www.thelearningbarn.org/index.html

Seymour Papert: Visions of Turtles Danced in His Head

by Lillian C. Spina-Caza

“In my vision the child programs the computer and, in doing so, both acquires a sense of mastery over a piece of the most modern and powerful technology and establishes an intimate contact with some of the deepest ideas from science, from mathematics, and from the art of intellectual model building” -- Seymour Papert, Mindstorms (5).

“Papert sees the computer presence as a potential agent for changing not only how we do things but also how we grow up thinking about doing things” – Cynthia Solomon writing about Papert in Computer Environments for Children (112).

It is impossible for me not to regard Seymour Papert as a jolly-Old-Saint-Nick sort of fellow. It isn’t just the photographs I have come across of Papert (like the one above), with his round, red cheeks and full beard, which inspire this association, although I could argue there truly is an uncanny resemblance (have you seen the original Miracle on 34th Street?). No, it is Papert’s 40-year career dedicated to enriching the intellectual lives of children that makes this association possible. Papert’s contributions to the field of HCI and educational technology are significant because of his unwavering belief in the abilities of all children to learn. From the start, he did not view children as passive consumers of technology, but as active producers of knowledge while interacting with computer technology. “In the LOGO environment…[t]he child programs the computer. And in teaching the computer how to think, children embark on an exploration about how they themselves think…Thinking about thinking turns the child into an epistemologist, an experience not even shared by most adults” (Papert, 1980, 11).

According to his biography, available at The Learning Barn Seymour Papert Institute website, Papert, a native of South Africa and an anti-apartheid activist in the early 1950s, joined the faculty of the University of the Witwatersrand when he was in his twenties. Between 1954 and 1958, Papert conducted mathematical research at England’s Cambridge University and the Institut Henri Poincaré in Paris. It was around this time that he began to make the connections between mathematics and the fields of artificial intelligence and cognitive science, which led to an invitation by Jean Piaget to join his Centre d’Épistémologie Génétique in Geneva, where Papert devoted four years to studying how children learn (Papert Biography, ¶2).

In 1964, Papert was invited to MIT as a research associate, was eventually made Professor of Mathematics, and later became Professor of Learning Research. In the late 1960s, Papert worked with Wallace Feurzeig’s Logo programming language (the first programming language designed for children), extending it to include “ ‘turtle graphics,’ in which kids were able to learn geometric concepts by moving a ‘turtle’ around the screen” (Bruckman et al., 804). The underpinning of Papert’s Logo work was his conviction that children learn best by doing. Computers provided a dynamic framework where “processes can be displayed and played with, processes that act on and with numbers” (Solomon, 118).
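To make the turtle idea concrete, here is a minimal sketch in Python of how a turtle turns movement commands into geometry. It is not Logo and not Papert's implementation: the `Turtle` class and `polygon` function are hypothetical stand-ins of my own, written without any screen so the geometry can be inspected directly.

```python
import math


class Turtle:
    """A screen-free stand-in for a Logo turtle (hypothetical, for illustration)."""

    def __init__(self):
        self.x, self.y = 0.0, 0.0
        self.heading = 0.0             # degrees; 0 points along the positive x-axis
        self.path = [(self.x, self.y)]  # every point the turtle has visited

    def forward(self, distance):
        """Move in the current heading and record the new position."""
        rad = math.radians(self.heading)
        self.x += distance * math.cos(rad)
        self.y += distance * math.sin(rad)
        self.path.append((self.x, self.y))

    def right(self, degrees):
        """Turn clockwise by the given number of degrees."""
        self.heading = (self.heading - degrees) % 360


def polygon(turtle, sides, length):
    """Draw a regular polygon: repeat 'forward, then turn 360/sides'."""
    for _ in range(sides):
        turtle.forward(length)
        turtle.right(360 / sides)


t = Turtle()
polygon(t, 4, 100)   # roughly what a child might type as REPEAT 4 [FORWARD 100 RIGHT 90]
print(len(t.path), "points visited; final heading", t.heading)
```

The geometric concept hiding in those few lines is that the turns must add up to a full 360 degrees for the turtle to end where it began, which a child can discover by changing the number of sides and watching what happens.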

In Mindstorms: Children, Computers and Powerful Ideas (1980), a seminal work on how children learn, Papert writes, “I take from Jean Piaget a model of children as builders of their own intellectual structures. Children seem to be innately gifted learners, acquiring before they go to school a vast quantity of knowledge by a process I call ‘Piagetian Learning,’ or ‘learning without being taught’” (7). Piaget’s constructivist theory, now called discovery learning, influenced Papert’s constructionist principle, which refers to “everything that has to do with making things and especially to do with learning by making, an idea that includes, but goes far beyond the idea of learning by doing” (Papert, 1999, viii). Both Piaget’s and Papert’s concepts of how children learn and think have been influential in shaping educational policy over the past several decades.

Papert happened to be working at a pivotal point in the history of both computers and education. The 1960s and 1970s saw a "deschooling movement" influenced by education critics who began to express “concerns that public schools were preaching alien values, failing to adequately educate children, or were adopting unhealthy approaches to child development” (Home-Schooling, ¶4). As a result, alternative, child-centered schooling emerged as a grassroots revolution and expanded to include a variety of educational choices, from home-schooling to religious and private not-for-profit schools, technological schools, and alternative public magnet and charter schools. “The concept of alternative schooling, which first emerged as a radical idea on the fringe of public education, evolved to a mainstream approach found in almost every community in the United States and increasingly throughout the world” (Alternative-Schooling, ¶ 13). Both Papert and Piaget were working at a time when new ideas and changes were evolving in modern education. Numerous developments occurring in the field of computer science at the time Papert came to MIT also helped shape his work.

The early 1960s onward saw an “outpouring of ideas and systems tied to the newly realized potential of computers” (Grudin, 4). IPTO funding from the United States government was being used by researchers like J.C.R. Licklider, Marvin Minsky, and John McCarthy to expand computer science departments, develop the field of Artificial Intelligence, and conceive of the Internet (Grudin, 4). Wesley Clark, who worked with Licklider and was instrumental in building the TX-0 and TX-2 at MIT’s Lincoln Labs, put Boston on the map as a center of computer research (Grudin, 5). Ivan Sutherland’s 1963 PhD thesis described the Sketchpad system, built on the TX-2 platform with the goal of “making computers ‘more approachable’ ” (Grudin, 5). Technological developments such as Sketchpad, which launched the field of computer graphics, would be instrumental to Papert’s work. Other visionaries who advanced the field of HCI, like Douglas Engelbart, who had a conceptual framework for the augmentation of man’s intellect and supported and inspired programmers and engineers (Grudin, 5), paved the way for the work that Papert and others would contribute to the field.

Though he is probably best known for the development of Logo, Papert was instrumental in shaping child-computer interactions by inspiring others to think differently about computers. For example, his work with children and Logo led to the design of a variety of programming languages intended for children. Papert is also credited with inspiring Alan Kay, a pioneer of object-oriented programming languages and developer of the concept of modern personal computing, to design the Dynabook, a laptop personal computer for children (Kay, ¶9). More recently, both Kay and Papert have been involved with Nicholas Negroponte’s and Joe Jacobson’s One Laptop Per Child (OLPC) Association, a not-for-profit corporation with the mission of forcing the price of laptops down to a level that would make “one laptop per child” feasible on a global scale (Papert Biography, ¶7).

Renowned in the field of computer science, Papert was nonetheless first and foremost an experienced mathematician with a theory about how children learn, a theory influenced by his early work with Swiss philosopher and psychologist Jean Piaget. According to Cynthia Solomon, one of Papert’s former MIT colleagues and author of Computer Environments for Children, “Piaget’s research provided Papert with a large body of successful examples describing children’s learning without explicit teaching and curricula. Papert sees Piaget as ‘the theorist of what children can learn by themselves without the intervention of educators’ (Papert 1980d. p.994)” (Solomon, 111).

Papert himself says that one of Piaget’s most important contributions to modern education was his belief “that children are not empty vessels to be filled with knowledge (as traditional pedagogical theory has it), but active builders of knowledge—little scientists who are constantly creating and testing their own theories of the world” (Papert, 1999). Inspired by Piaget’s work, Papert came to view learning as a constructive process. As Solomon explains:

He [Papert] believes that one of Piaget’s most important contributions is not that there are stages of development but that people possess different theories about the worlds. Children’s theories contrast sharply with adult theories. Piaget showed that even babies have theories, which are modified as the children grow. For Papert, the process by which these theories are transformed is a constructivist one. Children build their own intellectual structures. They use readily available materials within their own cultures (Solomon, 103).

The work of Piaget and other mathematicians in the 1960s offered alternatives to the formal Principia Mathematica approach to teaching mathematics, which reduced all math to logic and emphasized formula. Piaget’s work revealed that logic is not fundamental but is developed over time as children interact with the world. The French mathematicians who published collectively under the name Nicolas Bourbaki supported the point of view that “number is not a fundamental idea but is instead constructed; the structures are built up from other structures and, for Piaget, from experiences with the immediate environment and culture” (Solomon, 117).

Papert built not only upon the work of Piaget and Bourbaki, but also upon that of other mathematicians such as Robert B. Davis, who views learning as discovery drawn from a child’s everyday experiences, and Tom Dwyer, who maintains that creating conditions favorable to exploration leads to discovery and effective learning. Dwyer sees the computer as an expressive medium and as a source of inspiration to teachers and students, as well as a means by which students can engage in solo learning (Solomon, 11). Papert also imagines the computer as an expressive medium, a carrier of powerful ideas, and an intellectual agent in a child-centered, child-directed environment (Solomon, 131).

In Mindstorms, Papert presents two fundamental ideas: first, computers can be designed in such a way that learning to communicate with them is a natural process akin to learning French while living in France, and second, learning to communicate with a computer may change the way other learning takes place (6). Papert explains:

We are learning how to make computers with which children love to communicate. When this communication occurs, children learn mathematics as a natural language. Moreover, mathematical communication and alphabetic communication are thereby both transformed from the alien and therefore difficult things they are for most children into natural and therefore easy ones. The idea of ‘talking mathematics’ to a computer can be generalized to a view of learning mathematics in ‘Mathland’; that is to say, in a context which is to learning mathematics what living in France is to learning French (6).

For Papert, the Mathland metaphor becomes a way to question deep-seated assumptions about human abilities (6) and to challenge the social processes that contribute to the construction of ideas about what children can learn, how they learn it, and when. “A central idea behind our learning environments was that children would be able to use powerful ideas from mathematics and science as instruments of personal power” (212). Although early research did not shore up the idea that computer programming improves general cognitive skills, Papert’s work has inspired the development of numerous educational or construction kits designed to appeal to a user’s interests and experiences (Bruckman et al., 2008).

On December 5, 2006, while attending the International Commission on Mathematical Instruction at Hanoi Technology University in Vietnam, Papert was struck by a motorcycle and suffered severe brain injuries. He fell into a coma and needed two brain surgeries, after which he spent more than two years in and out of hospitals dealing with several complications resulting from the accident. According to The Learning Barn Seymour Papert Institute website, last updated in March 2008, Papert’s health has been steadily improving, and on February 29 he celebrated his 80th birthday.

He looks wonderful, is in good physical health and is back to his normal weight… He is slowly getting back to the computer, enjoys watching videos of his lectures and seminars and is beginning to look at notes from his unfinished book… Doctors say that eventual recovery from such serious brain injuries takes one to two full years and judge that because of the complications he suffered this time must be counted from May 2007 (Papert Institute, ¶ 20).

May 2009 is still several months away, and though I do not presume to guess what’s on Papert’s mind, it is my hope that visions of computers and kids are dancing in his head and that, perhaps, one day he will be able to share these ideas and more about how to “benefit the minds and lives of the children of the world” with the rest of us (Papert Institute, ¶ 20).

Works Cited:

Alternative-Schooling. “International Alternative Schools” (¶ 13). Available at http://education.stateuniversity.com/pages/1746/Alternative-Schooling.html [2008, September 14]

Bruckman, A., Bandlow, A. & Forte, A. (2008). HCI for Kids. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (pp. 804-805). NY: Lawrence Erlbaum Associates.

Grudin, J. (2008). A Moving Target: The Evolution of HCI. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (pp. 4-5). NY: Lawrence Erlbaum Associates.

Home-Schooling. History. Available at http://education.stateuniversity.com/pages/2050/Home-Schooling.html [2008, September 14]

Ishii, H. (2008). Tangible User Interfaces. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (p. 477). NY: Lawrence Erlbaum Associates.

Lazzaro, N. (2008). Why We Play: Affect and the Fun of Games. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (p. 686). NY: Lawrence Erlbaum Associates.

Sutcliffe, A. (2008). Multimedia User Interface Design. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (p. 396). NY: Lawrence Erlbaum Associates.

Thomas, J.C., & Richards, J.T. (2008). Achieving Psychological Simplicity. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (p. 498). NY: Lawrence Erlbaum Associates.

Zaphiris, P., Siang Ang, C. & Laghos, A. (2008). Online Communities. In A. Sears and J.A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, evolving technologies and emerging applications (p. 614). NY: Lawrence Erlbaum Associates.

Papert, S. (1999). Introduction: What is Logo? And Who Needs It? In Logo Philosophy and Implementation. Available at http://www.microworlds.com/company/philosophy.pdf [2008, September 14]

Papert, S. (1980). Mindstorms: Children, Computers, and Powerful Ideas. NY: Basic Books, Inc.

Papert, S. (1999, March 29). Papert on Piaget. In Time "The Century’s Greatest Minds" (p. 105). Available at www.papert.org/articles/Papertonpiaget.html [2008, September 13]

Solomon, C. (1986). Computer Environments for Children. Cambridge: MIT Press.

The Learning Barn Seymour Papert Institute. Report on Seymour Papert’s Condition: The History (¶ 20). Available at www.thelearningbarn.org/index.html [2008, September 13]

The Learning Barn Seymour Papert Institute. Seymour Papert Biography (¶ 4). Available at www.thelearningbarn.org/AboutUs.html [2008, September 13]

Viewpoints Research Institute. Dr. Alan Kay (¶ 1). Available at www.vpri.org/html/people/founders.htm [2008, September 13]

For more information and readings by Seymour Papert visit his website at www.papert.org or visit The Learning Barn Seymour Papert Institute www.thelearningbarn.org/.

Morton Heilig, pioneer in virtual reality research

Morton Heilig (1926-1997) was an American cinematographer, theorist and inventor whom scholars credit with originating studies of virtual reality through his work in immersive multimedia, and whose theory and inventions were influential in later designs of virtual reality devices.

CINEMA OF THE FUTURE

Heilig was inspired by Cinerama, a technique that used three synchronized projectors to show movies on an arced widescreen, expanding the area of viewing space for the audience. In his 1955 essay, "The Cinema of the Future," Heilig writes that Cinerama and 3-D films, which had only recently entered widespread usage, were logical steps in the evolution of art: "The really exciting thing is that these new devices have clearly and dramatically revealed to everyone what painting, photography and cinema have been semiconsciously trying to do all along -- portray in its full glory the visual world of man as perceived by the human eye" (p. 244). Heilig wanted to expand this replication of reality beyond the available senses of sight and sound to create the future cinema after which he named his essay.

Heilig begins his study of the cinema of the future not by examining the relationship between film and viewer, but by trying to learn "how man shifts his attention normally in any situation" (1955/2002, p. 249). His study of attention deals with which of the five senses is in use at any given point in an experience. He tells us that the senses monopolize attention in the following proportions (p. 247):

- Sight: 70%

- Hearing: 20%

- Smell: 5%

- Touch: 4%

- Taste: 1%

Heilig also identified the various organs that were "the building bricks, which when united create the sensual form of man's consciousness": the eye, with 180 degrees of horizontal and 150 degrees of vertical three-dimensional color view; the ear, able to discern pitch, volume, rhythm, sounds, words and music; the nose and mouth, detecting odors and flavors; and the skin, registering temperature, pressure and texture (1955/2002, p. 245).

The goal of future cinema, said Heilig, was to replicate reality for each of these senses. He advocated theaters that would make use of magnetic tape with separate tracks for each sense: odors piped in at varying intensities through air conditioning systems, moving pictures that extended beyond the peripheral vision, and stereophonic sound delivered by dozens of speakers. Sensory stimulations would be provided in the proportions at which they naturally occurred (as listed above). Attention would be directed and maintained not through use of cinematic tricks, but by mimicking the natural focusing functions of the human eye. Through the creation of multisensory theater, man would better learn to manipulate the "sense materials" and so develop and arrange greater forms of art (1955/2002, p. 251).

With these procedures, based on the natural biology of man and applied to the methodology of art, Heilig believed that "The cinema of the future will become the first art form to reveal the new scientific world to man in the full sensual vividness and dynamic vitality of his consciousness" (1955/2002, p. 251).

Unfortunately, Heilig's ideas met with the same problem that he cites in his essay as delaying the development of 3D and films with sound: short-sighted financiers unwilling to provide funding. Before writing the essay, Heilig had already pitched his ideas in Hollywood, had them roundly rejected, and moved to Mexico City where he found them better received (Packer & Jordan, 2002, p. 240). In fact, "The Cinema of the Future" was originally published in Spanish, in the Mexican architectural journal Espacios (later reprinted in English by MIT in 1992).

SENSORAMA

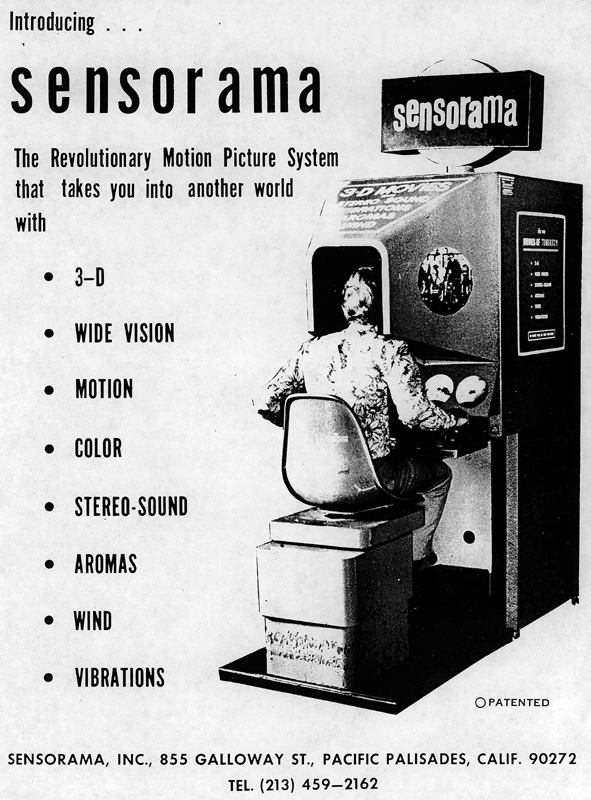

After his theories about immersive theatre fell on mostly deaf ears, Heilig decided to take things into his own hands, developing (while completing his Communication Master's at the Annenberg School at the University of Pennsylvania) a device that would put his theories into practice. Heilig called the device, which resembled a 1980s-era arcade game, the Sensorama Simulator.

In his patent for the Sensorama (filed in 1961), Heilig stresses the pedagogical potential for the device, discussing, for instance, the armed services, who, "must instruct men in the operation and maintenance of extremely complicated and potentially dangerous equipment, and it is desirable to educate the men with the least possible danger to their lives and to possible damage to costly equipment" (p. 9). The default experience that shipped with the short run of Sensorama, however, was not a replication of the battlefield, but a series of journeys, including a motorcycle ride through Brooklyn (complete with seat vibrations mimicking the motor of the bike, the smell of baking pizza, wind from strategically placed fans, voices of people walking down sidewalks) and a view of a belly-dancer (with cheap perfume).

Howard Rheingold, in his 1991 book Virtual Reality: Exploring the Brave New Technologies, describes his own use of the Sensorama, 30 years after its invention:

By virtue of its longevity, it was a time machine of sorts. I sat down, put my hands and eyes and ears in the right places, and peered through the eyes of a motorcycle passenger at the streets of a city as they appeared decades ago. For thirty seconds, in southern California, the first week of March, 1990, I was transported to the driver's seat of a motorcycle in Brooklyn in the 1950s. I heard the engine start. I felt a growing vibration through the handlebar, and the 3D photo that filled much of my view came alive, animating into a yellowed, scratchy, but still effective 3D motion picture. I was on my way through the streets of a city that hasn't looked like this for a generation. It didn't make me bite my tongue or scream aloud, but that wasn't the point of Sensorama. It was meant to be a proof of concept, a place to start, a demo. In terms of VR history, putting my hands and head into Sensorama was a bit like looking up the Wright Brothers and taking their original prototype out for a spin. (p. 50)

Sensorama failed to catch on and faded away due to financial issues, but, as Joseph Kaye wrote in 2001, the machine remains the pinnacle of immersive experience in some respects, notably in its use of olfactory stimulus.

TELESPHERE MASK

Even before the Sensorama, however, Heilig had worked on another patent that would have also proved influential on future virtual reality designers, had they heard of it: the Telesphere mask, awarded a patent in 1960 under the name, "Stereoscopic-Television Apparatus For Individual Use."

The Telesphere mask was a sort of head-mounted version of the Sensorama that would allow for wrap-around views, stereo sound, and air currents that could blow at different velocities or temperatures and could carry smell (Heilig, 1957). The Telesphere was the first patented head-mounted apparatus designed to convey virtual views to the user, predating Ivan Sutherland's influential "Head-Mounted Three-Dimensional Display," nicknamed the "Sword of Damocles," by half a decade. The mask was built as a prototype, but Rheingold surmised in 1991 that, "If it had not been for the vicissitudes of research funding, Morton Heilig, rather than Ivan Sutherland, might be considered the founder of VR" (p. 46).

Shortly afterward, backed by exposure from Rheingold's first-hand reports of experiencing his work, Heilig's reputation as a pioneer in virtual reality studies and technology grew. He spoke in late 1991 at the CyberArts International Conference, where his speech was received as "a fascinating picture of a man whose vision far outpaced those with the resources to bring his ideas into reality...there was no doubt that Heilig deserves respect for his pioneering work" (Czeiszperger & Tanaka, 1992, p. 93).

LASTING INFLUENCE

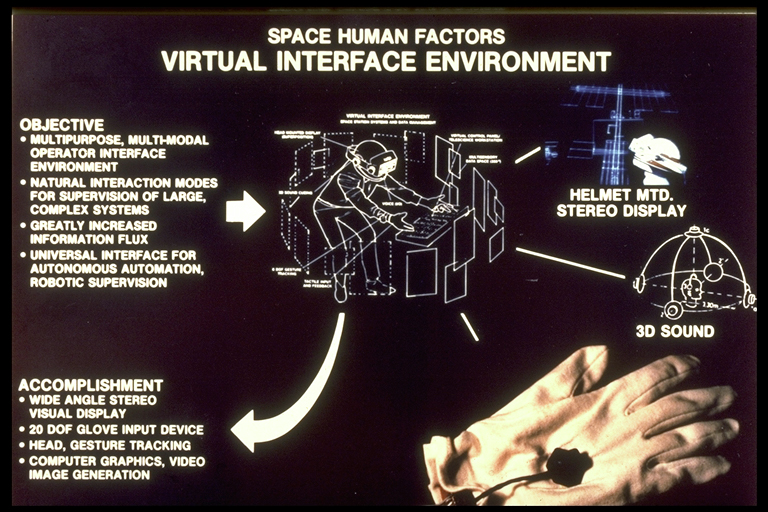

Rheingold was turned on to Heilig's work by a technologist named Scott Fisher, who had the Sensorama poster displayed above in his office at the NASA Ames Research Center (1991, p. 51). Fisher's tenure as founding Director of the Virtual Environment Workstation Project (VIEW) at NASA helped create the head-mounted viewer and glove that have been associated with virtual reality since the mid-1980s.

Fisher cited Heilig's work with the Sensorama as an influence in his 1991 essay, "Virtual Environments, Personal Simulation & Telepresence," and discussed the major shortcoming of the device: the fact that the user couldn't independently move or change their viewpoint (p. 3). These decisions were made, as Heilig explained in the essay discussed above, by the director of the Sensorama film.

Earlier, Fisher had worked on a project called the Aspen Movie Map that took ideas from Heilig's Sensorama and expanded them to allow for autonomy on the part of the user. The project involved the virtualization of Aspen, Colorado, in which users could freely tour the city, moving forward and backward down streets (similar to Google Maps Street View) and even inside some buildings. In one configuration, Fisher writes, the user was surrounded on all sides by views of Aspen, similar to Heilig's wish for an image that would fill the whole of a viewer's vision (1991, p. 3).

At NASA, Fisher was a pioneer in what came to be known as "telepresence," or the concept of "being there" without actually being there. The headset and glove developed in the VIEW labs virtually stimulated three of the user's senses: sight, sound and touch. A headset with stereo speakers, similar to the Telesphere, allowed views in three dimensions that responded to motion of the user's head. The glove allowed for feelings of pressure, as mandated by Heilig's essay, that would allow the user to, for instance, remotely grasp things with a robot arm that would relay tactile responses (Fisher, 1991, p. 4).